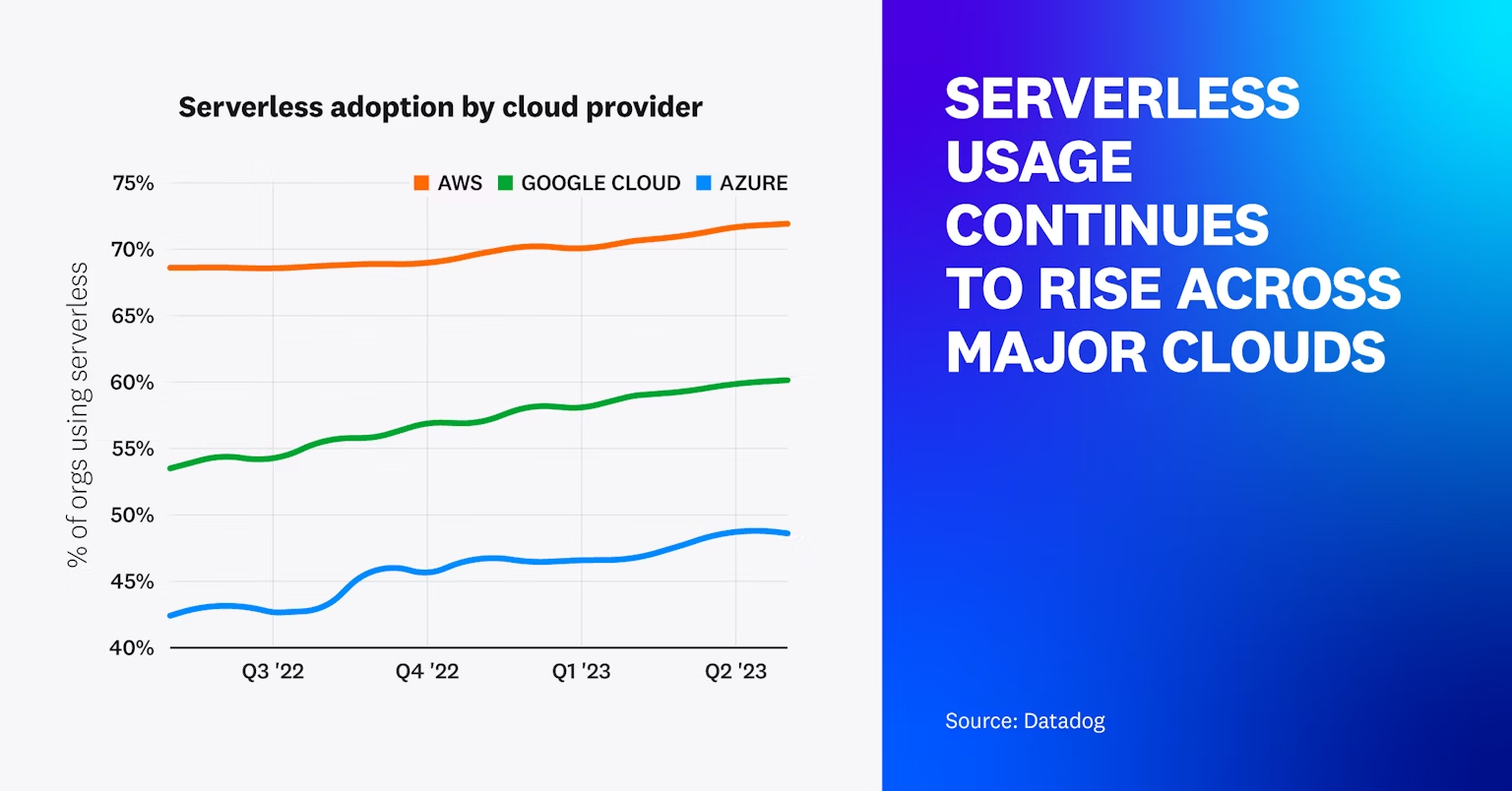

Is serverless computing truly the future of cloud technology, or just another passing trend? Initially hailed as a transformative technology, serverless computing promised to free businesses from the burdens of server maintenance, scaling issues, and infrastructure costs. As of 2023, the majority of organizations using AWS and Google Cloud had at least one serverless deployment (as per Datadog’s State of Serverless report).

Yet, as more companies adopted this paradigm, they faced unexpected hurdles like escalating expenses, vendor lock-in, and intricate debugging. So, is this truly the best solution for every business, or does it come with its own set of challenges for yours? In this blog, we’ll explore these complexities by looking at both the rise and gradual decline of serverless computing, helping you make an informed decision.

The Rise of Serverless Computing

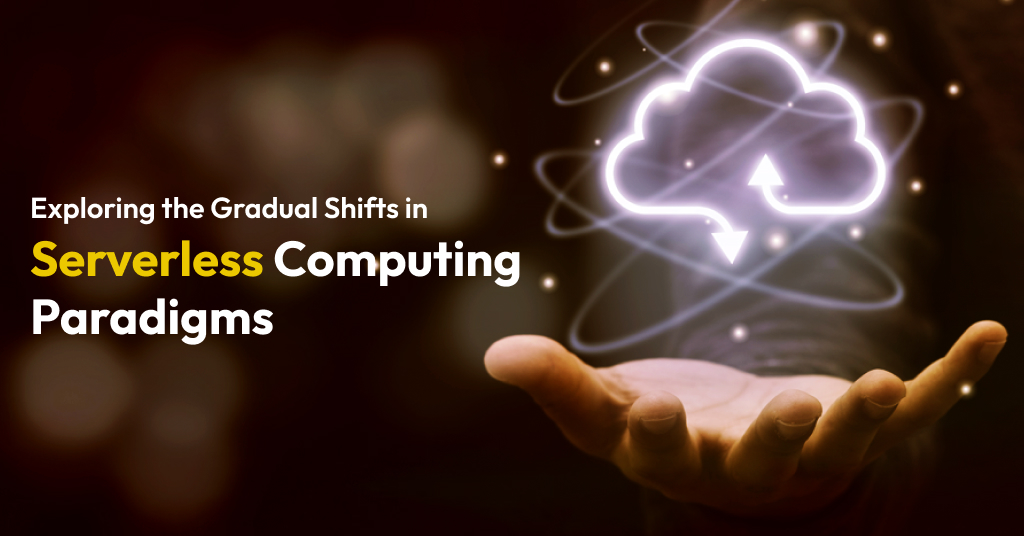

Soon after SaaS (software-as-a-service) gained traction in the early 2000s, cloud providers like AWS started offering IaaS (infrastructure-as-a-service) through EC2 in 2006. Under this model, cloud service providers offered machine resources on demand. This let developers focus on their code, integrating diverse services, approaches, and techniques without worrying about server administration.

This development (application of serverless computing in a cloud context) was further cemented by the introduction of Google App Engine, a PaaS (platform-as-a-service), in 2008. It allowed developers to build and deploy applications without concerning themselves with the underlying hardware or system.

Soon after came the container era. With CaaS (container-as-a-service), developers could have numerous containers with entirely different tech stacks and dependencies running in isolated silos on the same machine.

A few years later, in 2014, Amazon Web Services established the serverless paradigm by launching AWS Lambda, emphasizing its ability to run code in response to events without managing servers. This shift to providing FaaS (function-as-a-service) meant users only paid when the function was running.

How Does Serverless Computing Work?

Serverless computing redefined how applications were developed, deployed, and managed. While the developers focused on their code, cloud providers managed all backend infrastructure, including server provisioning, maintenance, and scaling. Here are some key features of serverless computing:

Event-Driven Execution

Serverless computing operates on an event basis where functions have to be triggered by specific requests/actions. For instance, uploading a file to cloud storage could automatically trigger a function to process that file.
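The file-upload example can be sketched as a small handler. This is a minimal illustration, assuming an event shaped like an AWS S3 upload notification; `process_file` and the sample bucket and key are hypothetical placeholders, not part of any real deployment.

```python
from urllib.parse import unquote_plus


def process_file(bucket: str, key: str) -> str:
    """Hypothetical placeholder for the real work (resize, parse, index...)."""
    return f"processed s3://{bucket}/{key}"


def handler(event: dict, context: object = None) -> list[str]:
    """Entry point the platform would call once per upload notification."""
    results = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        results.append(process_file(bucket, key))
    return results


# Local simulation of the event the platform would deliver (assumed values).
sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "uploads"},
                "object": {"key": "reports/q2+summary.pdf"}}}
    ]
}
print(handler(sample_event))
```

Nothing in the function polls for new files; it only runs when the platform hands it an event, which is the core of the event-driven model.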

Stateless Functions

The functions offered in serverless computing are stateless, i.e., their invocation is independent. Calling them does not require any knowledge of previous calls unless explicitly managed through external storage.
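To illustrate what "explicitly managed through external storage" can look like, here is a minimal sketch. `ExternalStore` is a hypothetical in-memory stand-in for a managed key-value store or database; a real function would call an actual storage service instead.

```python
class ExternalStore:
    """Hypothetical stand-in for a managed key-value store (in-memory here)."""

    def __init__(self) -> None:
        self._data: dict[str, int] = {}

    def get(self, key: str, default: int = 0) -> int:
        return self._data.get(key, default)

    def put(self, key: str, value: int) -> None:
        self._data[key] = value


def count_visit(event: dict, store: ExternalStore) -> int:
    """Stateless function: everything it knows comes from the event or the store."""
    user = event["user_id"]
    visits = store.get(user) + 1
    store.put(user, visits)
    return visits


store = ExternalStore()
first_visit = count_visit({"user_id": "alice"}, store)
second_visit = count_visit({"user_id": "alice"}, store)
print(first_visit, second_visit)
```

The function itself keeps no memory between calls, so any instance can serve any request. The read-then-write step here is not safe under concurrent invocations; a real store would typically need an atomic increment or a conditional write.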

Automatic Scaling

Platforms that offer serverless computing can handle the scaling of functions as per the incoming request load. This means if a function needs to be run multiple times simultaneously, the serverless platform can allocate more resources to handle it and then scale down when the demand decreases.
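A rough way to reason about how many function instances a platform needs to run at once is Little's law: concurrency is approximately the request rate multiplied by the average duration. The sketch below applies that rule of thumb; the traffic figures are assumed for illustration, and real platforms apply their own limits and scaling behavior.

```python
import math


def required_concurrency(requests_per_second: float, avg_duration_seconds: float) -> int:
    """Estimate simultaneous instances needed (Little's law, rounded up)."""
    return math.ceil(requests_per_second * avg_duration_seconds)


# Assumed traffic pattern: quiet period, spike, then back to moderate load.
for rps in (5, 200, 20):
    instances = required_concurrency(rps, avg_duration_seconds=0.25)
    print(f"{rps:>4} req/s -> about {instances} concurrent instances")
```

With a traditional server fleet, someone has to plan capacity for the spike; in the serverless model, the platform is expected to follow this curve up and down on its own.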

Inherently High Availability

Serverless computing platforms can replicate and distribute functions across multiple geographic regions if needed. This makes them highly available as the application remains operational even if one region is down.

The Consequent Benefits of Serverless Computing

- Reduces operational overhead by eliminating hardware and server management

- Enhances cost efficiency with a pay-for-what-you-use pricing model

- Scales automatically without manual intervention

- Boosts developer productivity by focusing on code, not infrastructure

- Maintains operational continuity by distributing functions geographically

- Reduces time-to-market with streamlined deployment processes

Peak Adoption and Market Saturation in Serverless Computing

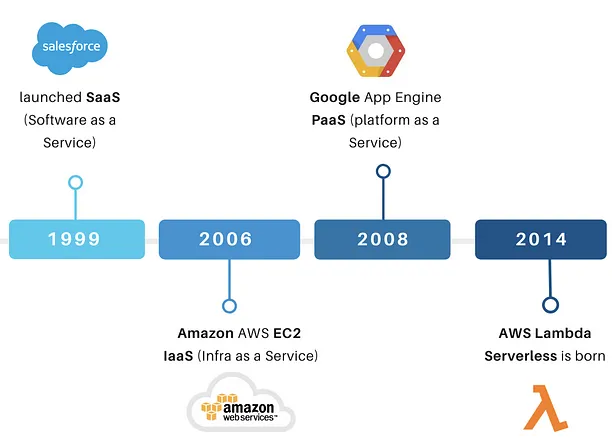

As serverless computing rose with a promise to reduce infrastructure management, it was quickly baked into the cloud development cake. This is evident from modestly increasing serverless adoption rates (as of Q2, 2023) for many organizations leveraging Azure (by 6%), AWS (by 3%), and Google Cloud (by 7%). (Source)

While serverless computing offers numerous advantages, focusing solely on them does not provide a complete understanding of its true impact. And perhaps this is why the initial enthusiasm for this technology started waning…

The Criticism

As more and more organizations started relying on serverless computing, its usage started broadening far beyond the original intent. Initially, it was introduced as a model where developers could deploy code without provisioning or managing servers, which was a big time-saver.

However, over time, the term was stretched to accommodate a variety of services, such as BaaS (backend-as-a-service), beyond the initial scope. Such services do help simplify some parts of managing servers, but they don’t completely take the task off developers’ hands. This blurs the original meaning of “serverless.”

The Decline of Serverless Computing

It wasn’t only the broadening of its utility that led to increased confusion around serverless computing. Several other factors accentuated the need for a comprehensive reevaluation and, in some cases, a decline in its adoption. Let’s uncover more.

Gradual Emergence of More Limitations

Over time, the limitations of serverless computing became more apparent. Issues such as the cold start problem, where functions take longer to execute after being idle, started surfacing. Additionally, companies faced difficulties in debugging and monitoring because of abstracted server management.
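The cold start effect can be simulated locally. In this sketch, expensive setup is cached in a module-level variable, which mimics how a warm function instance reuses earlier initialization; the 50 ms sleep and the `_create_client` helper are assumptions standing in for real setup work such as loading dependencies or opening connections.

```python
import time

_client = None   # reused only while the same instance stays warm
init_count = 0   # how many times the expensive setup actually ran


def _create_client() -> dict:
    """Hypothetical expensive setup; the sleep simulates its cost."""
    global init_count
    init_count += 1
    time.sleep(0.05)
    return {"connected": True}


def handle_request(event: dict, context: object = None) -> dict:
    global _client
    started = time.perf_counter()
    cold = _client is None
    if cold:
        _client = _create_client()
    elapsed_ms = (time.perf_counter() - started) * 1000
    return {"cold_start": cold, "elapsed_ms": round(elapsed_ms, 1)}


first = handle_request({})    # pays the setup cost
second = handle_request({})   # reuses the cached client
print(first)
print(second)
```

Once an idle instance is reclaimed by the platform, the cached state disappears and the next request pays the setup cost again, which is why latency-sensitive workloads notice cold starts most.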

Rising Cost Concerns

Serverless computing initially emerged as a cost-effective solution for intermittent and small-scale operations. However, it proved to be an expensive solution for large-scale or continuous use, because pay-for-what-you-use pricing grows with every invocation. With consistent workloads and long-term commitments in particular, it added significantly to costs. This pushed organizations to reconsider their cloud model, with some preferring traditional infrastructure over serverless.
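A back-of-the-envelope comparison shows why the economics flip at scale. Every price in this sketch is a made-up round number chosen for illustration, not a quote from any provider, and the model ignores free tiers, data transfer, and operational costs.

```python
# All prices are hypothetical round numbers for illustration only.
PRICE_PER_MILLION_REQUESTS = 0.20   # USD, assumed
PRICE_PER_GB_SECOND = 0.00002       # USD, assumed
SERVER_MONTHLY_COST = 70.00         # USD, assumed always-on instance


def serverless_monthly_cost(requests: int, avg_seconds: float = 0.2,
                            memory_gb: float = 0.5) -> float:
    """Pay-per-use cost: a per-request fee plus a fee for memory x time."""
    request_charge = requests / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    compute_charge = requests * avg_seconds * memory_gb * PRICE_PER_GB_SECOND
    return request_charge + compute_charge


def break_even_requests(avg_seconds: float = 0.2, memory_gb: float = 0.5) -> float:
    """Monthly request volume at which both options cost the same."""
    cost_per_request = serverless_monthly_cost(1, avg_seconds, memory_gb)
    return SERVER_MONTHLY_COST / cost_per_request


for n in (1_000_000, 10_000_000, 100_000_000):
    print(f"{n:>11,} requests: serverless ${serverless_monthly_cost(n):,.2f} "
          f"vs server ${SERVER_MONTHLY_COST:,.2f}")
print(f"break-even at roughly {break_even_requests():,.0f} requests per month")
```

Under these assumptions, serverless is far cheaper at low volume and clearly more expensive at high, steady volume. The exact crossover depends entirely on real prices, function duration, and memory size, so the numbers should be replaced with your own before drawing conclusions.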

Struggling to believe this while there’s still some buzz around serverless computing? The following example helps explain at least part of it.

Amazon Prime Goes Back from Microservices to Monoliths

What happened?

In 2023, Amazon surprised the cloud computing industry. The Prime Video team moved its audio/video monitoring service from microservices and serverless components to a monolith running on EC2 and ECS.

Why did it happen?

While (in theory) serverless computing would enable their teams to scale diverse services independently, the opposite happened. They hit a hard scaling limit at only around 5% of the expected load. Even optimizing individual components did not help much.

Portability Issues and Vendor Lock-Ins

Running serverless functions on specific clouds can complicate switching to different architectures due to each cloud’s unique proprietary configurations and tools.

For example, moving from AWS Lambda to Azure Functions is a complicated process.

- AWS Lambda uses a single execution model with automated compute management, whereas Azure Functions offers different hosting plans, such as a Consumption Plan (similar to Lambda’s pay-per-execution model) and a Premium Plan.

- AWS Lambda supports a broad range of programming languages, including Node.js, Python, Ruby, Java, Go, .NET Core, and custom runtimes. Azure Functions also supports a variety of languages but focuses on C#, F#, Java, JavaScript (Node.js), Python, PowerShell, and TypeScript.
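One common way to limit this kind of lock-in is to keep business logic free of provider-specific types and wrap it in thin adapters. The sketch below is a minimal illustration: `lambda_adapter` assumes an AWS Lambda proxy-style HTTP event (a dict whose `body` is a JSON string), while `generic_http_adapter` is a hypothetical adapter for some other platform that hands over raw bytes.

```python
import json


def greet(name: str) -> dict:
    """Provider-neutral business logic: no cloud SDK types in or out."""
    return {"message": f"Hello, {name}!"}


def lambda_adapter(event: dict, context: object = None) -> dict:
    """Adapter for a Lambda proxy-style HTTP event (body is a JSON string)."""
    payload = json.loads(event.get("body") or "{}")
    result = greet(payload.get("name", "world"))
    return {"statusCode": 200, "body": json.dumps(result)}


def generic_http_adapter(raw_body: bytes) -> tuple[int, bytes]:
    """Hypothetical adapter for a platform that passes the raw request body."""
    payload = json.loads(raw_body or b"{}")
    result = greet(payload.get("name", "world"))
    return 200, json.dumps(result).encode("utf-8")


print(lambda_adapter({"body": '{"name": "Ada"}'}))
print(generic_http_adapter(b""))
```

Moving providers then means rewriting the adapter rather than the logic. This does not remove lock-in from triggers, permissions, or managed services the function depends on, which is often where most of the migration effort goes.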

Security and Compliance Challenges

In serverless computing, security responsibilities are shared: the cloud provider handles the infrastructure like hardware and operating systems, while the user manages the application’s code, data, and configurations. Such a division complicates compliance. For example, if data handling and storage are partly managed by the cloud provider, ensuring compliance with standards such as GDPR, HIPAA, or PCI-DSS requires a clear understanding of where the provider’s responsibilities end and the user’s begin.

The above shortcomings began to push serverless into the background, prompting organizations to consider other cloud models, such as edge computing, multi-cloud, and microcloud. Additionally, another development that facilitated its decline was the explosion of Generative AI.

Why Does Generative AI Challenge Serverless Computing Paradigms?

Generative AI has also influenced a shift away from using serverless computing for certain tasks.

Here are some key reasons behind this shift:

- Resource Requirements: Generative AI solutions require a lot of computing resources. Serverless computing, which excels in managing small, quick tasks, often doesn’t meet these high-resource demands.

- Data Volumes: Generative AI thrives on big data. It requires sophisticated tools to manage and manipulate vast datasets and train models. Serverless computing’s hands-off approach to infrastructure can make it challenging to handle such needs.

- Economic Considerations: While serverless is cost-effective for irregular and short-term usage, the continuous heavy usage of serverless resources typical in AI applications can add up to higher costs.

- The Need for Consistency: AI applications need consistent performance to function reliably, especially in critical environments. The variability in serverless computing, like occasional delays in starting up or scaling, might not align well with the needs of AI-integrated applications.

To effectively implement generative AI solutions, organizations often need to return to on-premises servers with advanced computing capabilities or explore alternative cloud models.

What’s Next in Cloud Computing?

As many organizations rethink their commitment to serverless computing, alternative approaches such as edge computing, microclouds, and multi-cloud strategies are also gaining prominence.

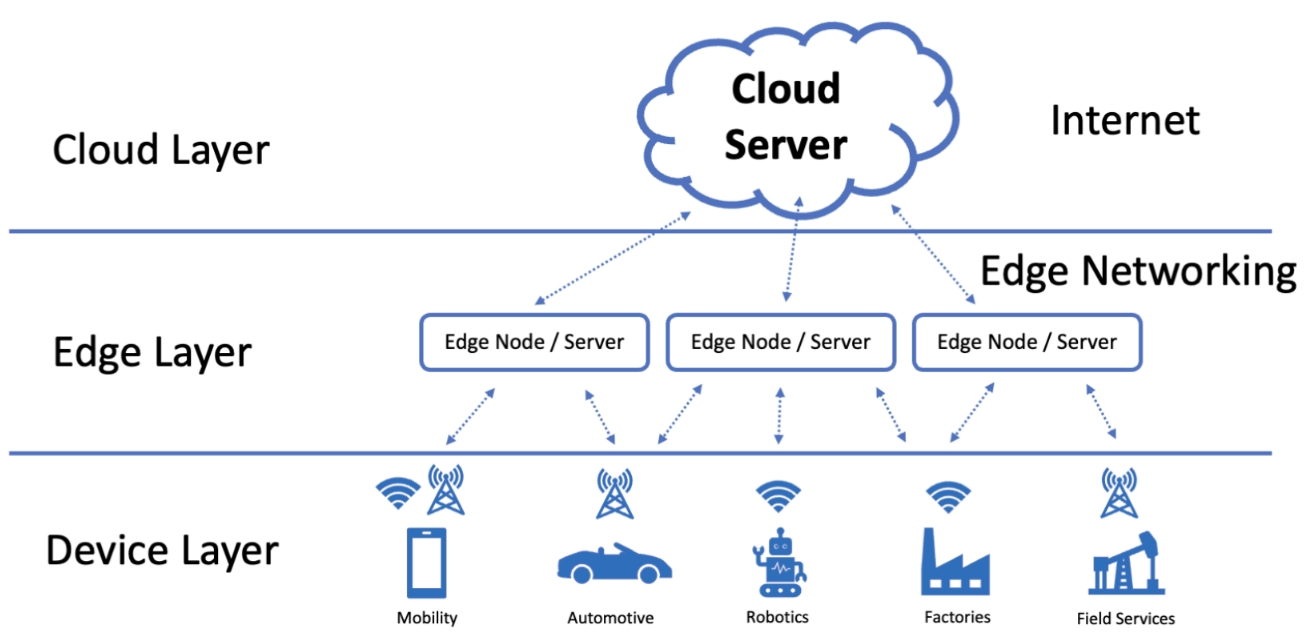

Edge Computing

As per IBM, edge computing is a distributed computing model that brings enterprise-grade applications closer to data sources such as IoT devices or local edge servers. By allowing these applications to process this data at the “edge,” it helps reduce response times and bandwidth usage.

Edge computing addresses several shortcomings of serverless models:

- It reduces latency by processing data close to the source.

- It can operate in environments with limited connectivity.

- Localized data processing helps comply with data sovereignty laws and reduces security risks associated with transmitting sensitive information to a central cloud.

Many organizations are leveraging this technology, especially since the emergence of 5G. Consequently, this market is booming, with growth opportunities projected to amount to USD 350 million (in revenue) by 2027. (Source)

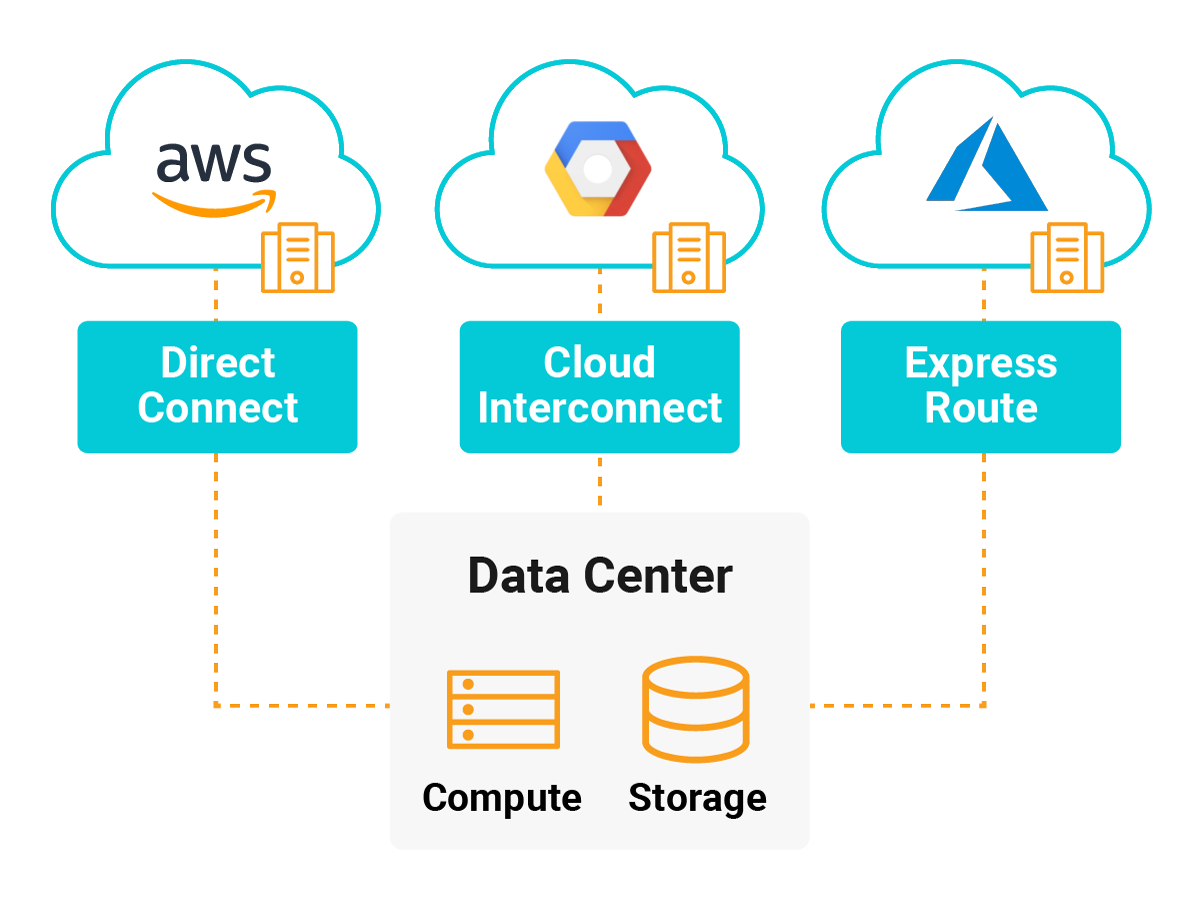

Multi-Cloud Computing

Multi-cloud computing is another hot topic in the cloud segment. This approach involves using multiple cloud services from different providers in a single heterogeneous architecture, allowing users to benefit from each provider’s unique features.

Multi-cloud computing helps in:

- Mitigating risks by distributing resources across multiple clouds.

- Helping organizations avoid vendor lock-ins.

- Optimizing performance by choosing specific services/functions that best meet their requirements.

As cloud services continue to evolve, tools and services that facilitate the management of multi-cloud environments are also improving. The future of multi-cloud computing is bright, with the market expected to exhibit a CAGR of 28% from 2023 to 2030. (Source)

Planning to Implement a Multi-Cloud Strategy?

Managing multiple cloud environments can be challenging. Our cloud experts can streamline your operations, ensuring efficiency and cost-effectiveness across all platforms.

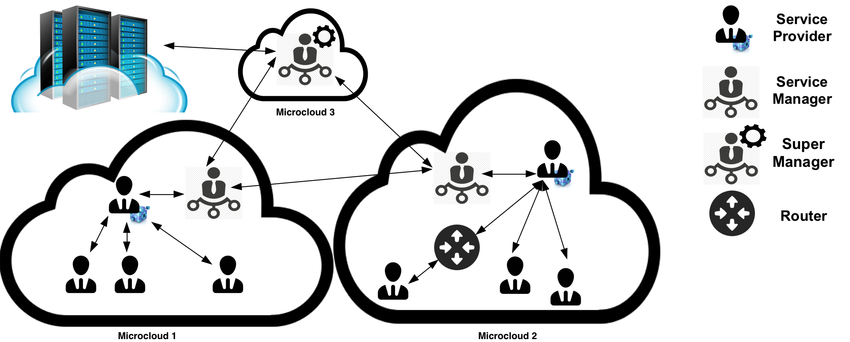

Microclouds

Another alternative to serverless computing that many companies have shifted to is microclouds. Microclouds are localized, small-scale cloud data center architectures that operate independently or as parts of a larger cloud infrastructure. This setup is particularly useful for handling local data processing tasks, providing cloud computing capabilities on a reduced scale closer to the end-users.

Microclouds also address a few of the limitations of serverless computing by offering:

- Local data processing, minimizing the distance data must travel and reducing latency

- Decentralized cloud services for more control and reliability

- Easier compliance with local regulations as they store and process data within the same geographical areas as the source.

Microclouds are often a cost-effective solution, as these localized data centers can utilize rented GPUs, which are often less expensive than traditional cloud services. However, this ongoing need for powerful GPUs also introduces some uncertainty regarding the long-term viability of microclouds. Nevertheless, this relatively new approach opens new frontiers in cloud computing.

Takeaway

Computing has come a long way, transitioning from models like SaaS and IaaS to PaaS and serverless. Each of these models aims to deliver one thing: simplified computing by reducing concerns about the underlying technical and hardware dependencies. This is what led to the creation of serverless computing in the first place!

It started with a bang and, even today, continues to be a widely used model for several organizations. However, as we recognize its limitations and cloud technologies advance, serverless computing is being reevaluated in several contexts. But that’s not the end. The future has countless developments, opportunities, and business requirements. Who knows when the trends might shift again?